
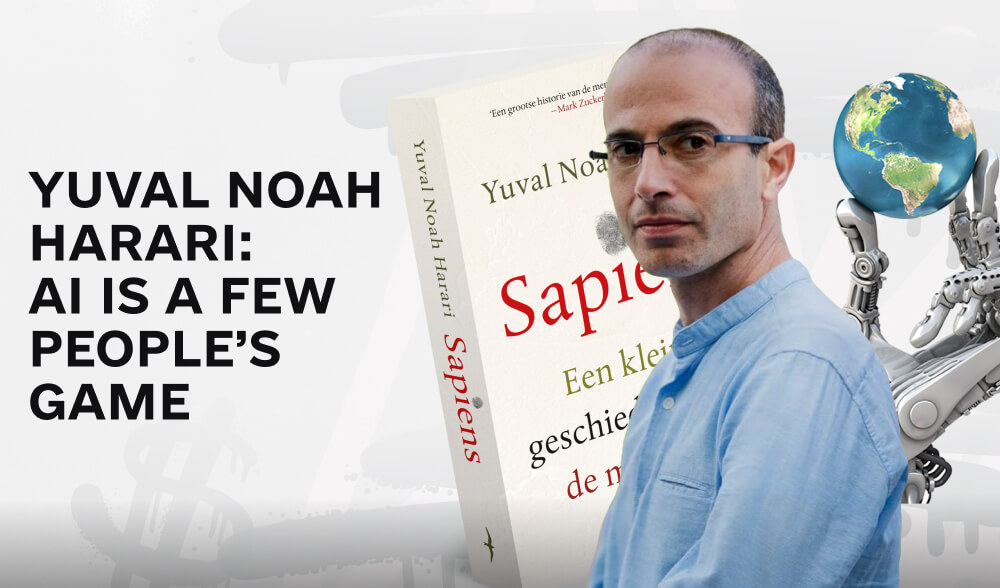

Yuval Noah Harari (YNH), a renowned historian and best-selling author, shares his thoughts on the challenges and risks associated with regulating artificial intelligence (AI) in a conversation with a journalist.

Journalist (J): Some large companies, such as OpenAI or Microsoft, are actively advocating for AI regulation. But isn’t this an attempt to strengthen their positions? After all, it will be harder for small companies to compete if they need expensive certifications, lawyers, and large teams to operate. We have already seen how GDPR has only increased the influence of tech giants. Won’t it happen again with AI?

YNH: You are absolutely right; this is an important question. Even now, developing powerful AI models requires enormous resources: money, data, and experts. In effect, it is a game for only a handful of players, and globally, only a few countries are leading this revolution. This is very dangerous, because it could repeat the scenario of the Industrial Revolution, when the industrialized countries went on to exploit the rest of the world.

Today, thanks to AI, it is possible to “capture” a country without even sending soldiers there. It is enough to access its data and control processes remotely. AI regulation should take into account not only national interests but also global balance. What should countries that are not involved in the AI race but will suffer from its impact do? After all, the technology will affect everyone.

J: Let’s go back to the Industrial Revolution. Back then, electricity itself was not regulated as such; only its uses were. Wouldn’t it be logical to do the same with AI and regulate only its applications, not the technology itself?

YNH: AI is not like electricity; it is something more autonomous, more like people. It can make decisions and create new ideas. Its potential for political, economic, and cultural change is enormous.

Some laws can be adapted to the digital age. Take the principle “do not steal.” The tech giants used to claim that this law did not apply to data. They took your data, manipulated it, and sold it, and none of this was considered stealing. That needs to change: just as in the physical world you can’t steal someone’s crops, in the digital world you shouldn’t be able to take someone’s data without permission.

Another example is the fight against fake people. In the past, creating fake people on a large scale was technically impossible, but now billions of fake identities can be generated. If we allow this, society will lose trust, and that would be a disaster for democracy. We need tough laws: there should be serious liability both for those who create fake people and for the platforms that allow them.

J: What about cases where creating fakes is innocent? For example, my son and I created several fake characters for a game.

YNH: The problem is not the creation but how it is used. If you are interacting with an AI, say a doctor-bot, it is important that you are clearly told: “This is not a real doctor, but an AI.” Transparency is the key to this issue.

J: Okay, but what about social media? What if people use AI to shape their responses in discussions?

YNH: That is acceptable as long as the person takes responsibility for what they post. The problem arises when platforms are flooded with bots generating content in enormous volumes. Imagine a platform where most messages are written not by humans but by millions of bots that write better, more convincingly, and in a more personalized way than humans do. In such a world, democracy can no longer function.

To preserve a democratic society, it is necessary to prevent a situation where public dialog is completely hijacked by bots.

J: Are you saying that if I have a large language model that I developed with my team of programmers, I should allocate 20% of that team to safety and report to some authority on how that work is being carried out?

YNH: It is just like building a car: you have people working to make it as good as it can be, but you also have people working on safety. Because even if you have no ethical principles of your own, you know that no government will allow your car on the road if it is unsafe.

J: That’s compelling, and it’s a great idea. But let’s go back to social media. Take Facebook or Twitter in 2012. They could have said that 20% of their employees were working on safety: removing inappropriate content, looking for terrorists, and so on. But how could a government have predicted, back then, what their impact on democracy would be a few years later?

YNH: I don’t think anyone can predict the consequences 5 or 10 years ahead. But regulators working on AI need to learn to react quickly to change. The damage caused by social media algorithms was already visible in 2016-2017, for example in Myanmar. People raised the alarm, but the corporations did not respond. If all the talent is concentrated in the private companies developing the technology, the battle is lost. But if we channel some of that talent into government agencies or NGOs that oversee safety, including social and psychological safety, the situation will improve.

J: But doesn’t this give big companies an advantage, since they can afford to comply with strict regulations while newcomers cannot?

YNH: This is a serious question. But already, the resources required to develop powerful AI models are so great that only a few players can compete, and globally, only a few countries. During the Industrial Revolution, a few countries used their advantage to exploit others. With AI, this could happen again. After all, you don’t need to send soldiers to dominate a country: you just need to control its data. Regulation should take into account not only national interests but also global justice.

J: Maybe AI is more like electricity or trains, and it’s not AI itself that should be regulated, but its use?

YNH: No, AI is far more powerful than trains. It can make decisions on its own and create new ideas. Its impact on politics, economics, and culture is enormous. Still, we can apply some old laws to AI, for example the commandment “thou shalt not steal.” When companies took data without permission, that was theft.

Similarly, the world has always had strict laws against counterfeiting money, because it threatened the financial system. Now, for the first time, we can create “fake people”: billions of bots that are indistinguishable from real people. If we allow this, trust in society will collapse, and democracy will collapse with it. We need strict laws against fake people. If a platform allows “fake people,” it should be punished severely.

J: But is it possible to relax these rules a bit for personal projects, for example when I create avatars with my child for fun?

YNH: No one forbids creating avatars, but they must not be passed off as real people. Interacting with an AI doctor, for example, can be very useful, but people should clearly know that it is not a real doctor but an AI.

This discussion reminds us that regulating AI is not just a technical challenge but an ethical one that will shape the future. Whether we use new technologies to enrich humanity or to create even greater inequality depends on the decisions we make today. AI should not become a tool of chaos but a key to a new era in which humanity faces its future with confidence rather than fear.